To prevent unauthorized domains from using your search engines, access is restricted by default so only whitelisted domains can use them.

Authorized Domains

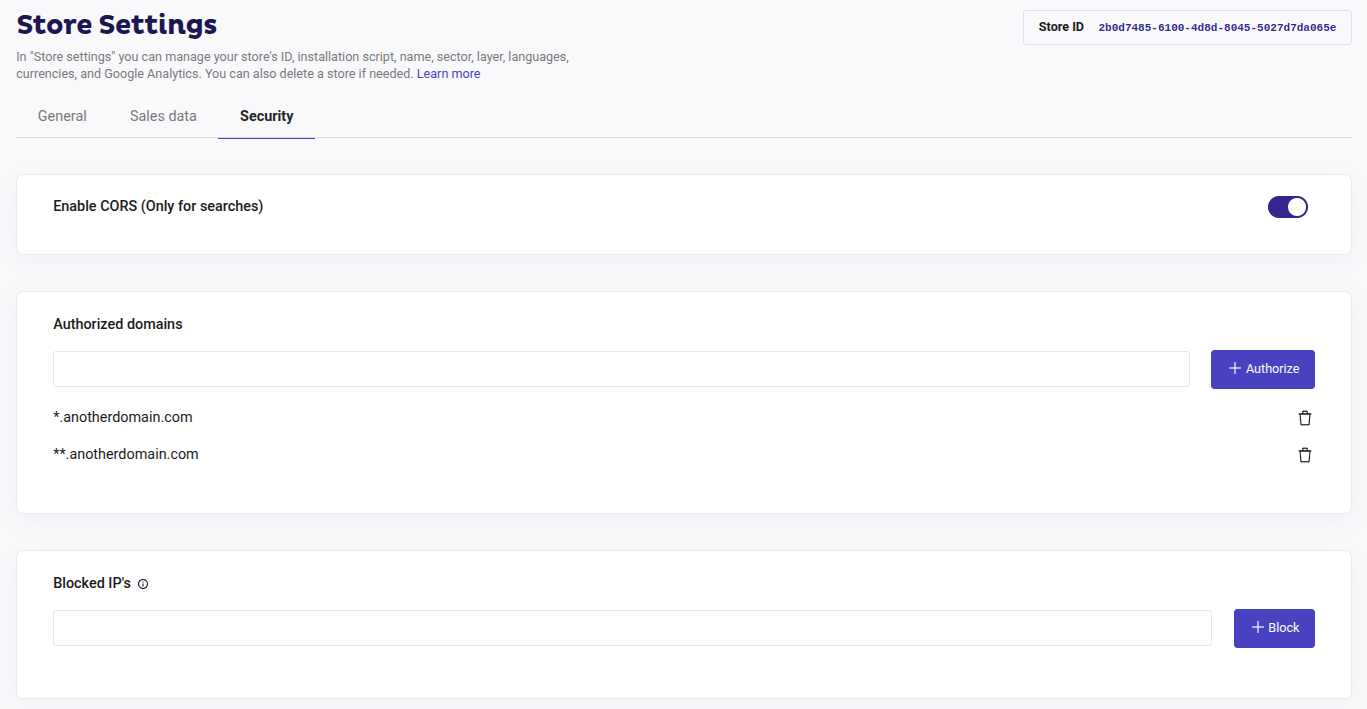

Doofinder uses CORS (Cross-Origin Resource Sharing) to protect your search engines. This means that only whitelisted domains can make client-side requests to your search engines.

To configure which domains have client-side access to a search engine, go to your Admin Panel > Configuration > General Settings > Security > Authorized domains. Select the Store and Search Engine you want to configure from the top menu.

By default, Doofinder enables your main domain, as well as *.doofinder.com so you can use search from the Control Panel. That's why you usually don't need to do anything to have Doofinder working on your own site.

Say you own a Search Engine for an eCommerce website accessible at https://www.mysafeshop.com. In the Domain Security screen you will probably see that *.mysafeshop.com is whitelisted under Authorized Domains.

If you want your users to search www.mysafeshop.com from another site, for instance https://www.myfriendsshop.com, you will have to authorize that domain by adding a new entry to Authorized Domains. Just enter www.myfriendsshop.com and save.
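To make the idea concrete, here is a minimal Python sketch of an origin check of this kind. It is an illustration only, not Doofinder's actual implementation: the function names and the `authorized` set are hypothetical, and it only handles exact hostnames (no wildcards). It assumes the browser sends the calling site in the `Origin` request header, as CORS-enabled browsers do.

```python
from urllib.parse import urlsplit


def origin_hostname(origin: str) -> str:
    """Extract the lowercase hostname from an Origin header value."""
    return (urlsplit(origin).hostname or "").lower()


def cors_allows(origin: str, authorized: set[str]) -> bool:
    """Return True if the request's origin is in the authorized list.

    Simplification (assumption): entries are exact hostnames only.
    """
    return origin_hostname(origin) in authorized


# Hypothetical list after adding the second shop from the example above.
authorized = {"www.mysafeshop.com", "www.myfriendsshop.com"}

print(cors_allows("https://www.myfriendsshop.com", authorized))
print(cors_allows("https://www.unknownshop.example", authorized))
```

The first call is allowed because the hostname was added to the list; the second is rejected because its hostname is not listed.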

How Patterns Work

Websites use domain names, and domain names have subdomains: www.doofinder.com is a subdomain of doofinder.com.

To avoid long lists of authorized domains, you can use patterns with wildcards to match, for instance, any subdomain of a domain.

Say you also have a blog accessible at blog.mysafeshop.com. You can grant access to your Search Engine from both www and blog by using a wildcard: *.mysafeshop.com.

If you have inner subdomains, you can repeat the pattern: *.*.mysafeshop.com.

If you use your Layer on different pages with multiple inner subdomains, you can match all of them with the double asterisk wildcard (**), as in: **.mysafeshop.com.
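The wildcard rules above can be sketched in Python as follows. This is only an approximation of the behavior described here, not Doofinder's own matcher: it assumes that `*` stands for exactly one subdomain label and that `**` stands for one or more labels. The function names are hypothetical.

```python
import re


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a domain pattern into a compiled regular expression.

    Assumed semantics (not confirmed by Doofinder):
      *   matches exactly one subdomain label (no dots)
      **  matches one or more labels separated by dots
    """
    parts = []
    for label in pattern.lower().split("."):
        if label == "**":
            parts.append(r"[^.]+(?:\.[^.]+)*")
        elif label == "*":
            parts.append(r"[^.]+")
        else:
            parts.append(re.escape(label))
    return re.compile(r"\.".join(parts))


def domain_matches(pattern: str, domain: str) -> bool:
    """Return True if the whole domain matches the pattern."""
    return pattern_to_regex(pattern).fullmatch(domain.lower()) is not None


for pattern, domain in [
    ("*.mysafeshop.com", "blog.mysafeshop.com"),
    ("*.mysafeshop.com", "eu.blog.mysafeshop.com"),
    ("*.*.mysafeshop.com", "eu.blog.mysafeshop.com"),
    ("**.mysafeshop.com", "a.eu.blog.mysafeshop.com"),
]:
    print(pattern, domain, domain_matches(pattern, domain))
```

Under these assumptions, the single wildcard covers `blog.mysafeshop.com` but not `eu.blog.mysafeshop.com`, which needs either the repeated pattern or the double asterisk.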

Blocked IPs

You can also manage access to your Search Engine by blocking specific IP addresses or entire IP ranges using network notation. This is a good way to prevent unwanted access: for example, to fend off bot attacks from public IPs, to quickly stop a one-off attack from a specific IP, or to block your company’s public IP so employees don't use up search requests unnecessarily.

Remember to review blocked IPs frequently: IP addresses on the internet get reassigned, so over time you may end up blocking a legitimate visitor.
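If you want to check which addresses a range would cover before adding it, Python's standard `ipaddress` module can help. The sketch below assumes that "network notation" means CIDR notation (for example `198.51.100.0/24`); the `is_blocked` function and the sample entries are hypothetical and use addresses reserved for documentation.

```python
import ipaddress


def is_blocked(client_ip: str, blocked_entries: list[str]) -> bool:
    """Return True if client_ip falls inside any blocked address or range."""
    addr = ipaddress.ip_address(client_ip)
    for entry in blocked_entries:
        # A single address such as "203.0.113.7" is treated as a /32 network.
        network = ipaddress.ip_network(entry, strict=False)
        if addr in network:
            return True
    return False


# Hypothetical block list: one single address and one /24 range.
blocked = ["203.0.113.7", "198.51.100.0/24"]

print(is_blocked("198.51.100.42", blocked))  # inside the /24 range
print(is_blocked("203.0.113.8", blocked))    # neighbor of the single blocked IP
```

A `/24` range covers 256 addresses, so it is worth confirming that no legitimate visitor falls inside a range before blocking it.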

How to Block IPs

Follow this step-by-step guide to block IPs:

- Identify the IP address. If you want to block your own IP, use a site like What's my IP to find your IP address.

- Go to your Doofinder Admin Panel, navigate to Configuration > General Settings > Security > Blocked IPs, and add the IPs to block.

Once added, Doofinder will no longer be triggered for those specific IP addresses.

Review blocked IPs frequently. If an IP is not static, you will need to update the blocked list accordingly, either to unblock an IP that is now valid or to block the new address of a non-static IP.

Bot Attacks

What are bot attacks? A bot attack is a surge of visits to your website performed by robots, at a volume abnormally higher than that of a real person's visit. A human visitor typically makes 5 to 10 page clicks, while a bot can produce hundreds of visits or more in a short period of time.
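As a rough illustration of that difference in volume, the following Python sketch counts requests per IP address and flags the ones above a threshold. Everything here is hypothetical: the function name, the threshold of 100 requests, and the sample data are assumptions for the example, and the input is assumed to be a list of client IPs taken from your own server logs for a short time window.

```python
from collections import Counter


def flag_suspicious_ips(request_ips: list[str], threshold: int = 100) -> list[str]:
    """Return the IPs that made more than `threshold` requests, sorted."""
    counts = Counter(request_ips)
    return sorted(ip for ip, n in counts.items() if n > threshold)


# Hypothetical window: one human-like visitor and one bot-like visitor.
window = ["192.0.2.10"] * 8 + ["203.0.113.7"] * 350

print(flag_suspicious_ips(window))
```

Only the address with hundreds of requests is flagged; the visitor with 8 requests stays well under the threshold. A flagged address is a candidate for review, not proof of a malicious bot.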

Please note that not all bots are bad, especially those from larger search engines such as Google, which provide positive customer referrals. Bots from smaller entities are the root cause of the problem: they visit more frequently, offer little value, and are trying to grow their own databases quickly. In sum, they feed on your data without returning any referrals.

If you would like to know how to prevent bot attacks, please check the Security Section of our FAQs.